Prompting Isn’t The Most Important Skill

It’s about the will to learn

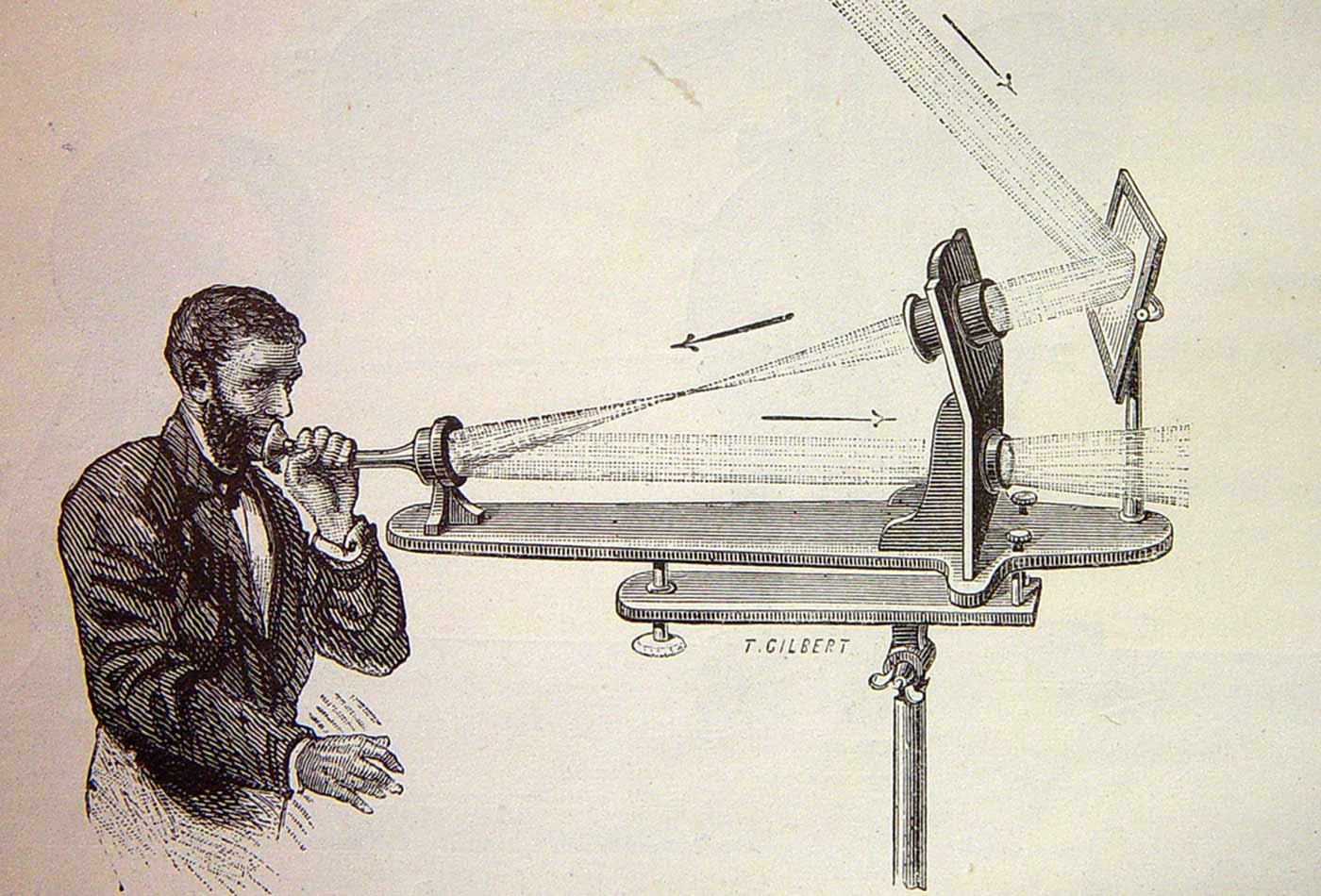

Illustration of the photophone's transmitter. (source: Wikimedia Commons)

Anant Agarwal, an MIT professor and one of the founders of the edX educational platform, recently created a stir by saying that prompt engineering was the most important skill you could learn, and that you could learn the basics in two hours.

Although I agree that designing good prompts for AI is an important skill, Agarwal overstates his case. But before discussing why, it’s important to think about what prompt engineering means.

Attempts to define prompt engineering fall into two categories:

- Coming up with clever prompts to get an AI to do what you want while sitting at your laptop. This definition is essentially interactive. It’s arguable whether this should be called “engineering”; at this point, it’s more of an art than an applied science. This is probably the definition that Agarwal has in mind.

- Designing and writing software systems that generate prompts automatically. This definition isn’t interactive; it’s automating a task to make it easier for others to do. This work increasingly falls under the rubric of RAG (Retrieval Augmented Generation), in which a program takes a request, looks up data relevant to that request, and packages everything in a complex prompt.
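To make the second definition concrete, here is a minimal Python sketch of a program that takes a request, looks up relevant data, and packages both into one prompt. It is an illustration under stated assumptions, not how any particular RAG product works. The word-overlap ranking in `retrieve` is a deliberately crude stand-in for real retrieval, and the function names, sample documents, and prompt wording are all hypothetical. The sketch stops at building the prompt; sending it to a model is left out.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real text processing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(request: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most words with the request.
    (Hypothetical ranking; real systems typically use embeddings.)"""
    query = tokenize(request)
    ranked = sorted(documents, key=lambda d: len(query & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(request: str, documents: list[str], k: int = 2) -> str:
    """Package the retrieved context and the request into a single prompt,
    telling the model to stay within the supplied information."""
    context = "\n".join(f"- {doc}" for doc in retrieve(request, documents, k))
    return (
        "Answer the question using only the information below. "
        "If the answer is not there, say you don't know.\n\n"
        f"Information:\n{context}\n\n"
        f"Question: {request}"
    )


# Hypothetical sample data, made up for illustration.
SAMPLE_DOCS = [
    "Our store is open 9am to 5pm on weekdays.",
    "Refunds are processed within 14 days of a return.",
    "Shipping to Canada takes 5 to 7 business days.",
]

if __name__ == "__main__":
    print(build_prompt("How long do refunds take?", SAMPLE_DOCS, k=1))
```

With `k=1`, the request "How long do refunds take?" pulls in only the refund document, since it is the only one that shares a word ("refunds") with the request. The instruction to answer only from the supplied information is the part that, as the next paragraph notes, makes hallucination less likely.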

Designing automated prompting systems is clearly important. It gives you much more control over what an AI is likely to do; if you package the information needed to answer a question into the prompt, and tell the AI to limit its response to information included in that package, it’s much less likely to “hallucinate.” But that’s a programming task that isn’t going to be learned in a couple of hours; it typically involves generating embeddings, using a vector database, then generating a chain of prompts that are answered by different systems, combining the answers, and possibly generating more prompts. Could the basics be learned in a couple of hours? Perhaps, if the learner is already an expert programmer, but that’s ambitious—and may require a definition of “basic” that sets a very low bar.
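The embedding and vector-database step mentioned above can be sketched in a few dozen lines of Python. This is a toy built on loudly stated assumptions: `embed` hashes words into a fixed-size vector instead of calling a learned embedding model, and `InMemoryVectorStore` (a hypothetical name) does a brute-force cosine-similarity search where a real vector database would use an index. Only the shape of the technique is meant to carry over: texts become vectors, and the stored texts whose vectors point in the direction most similar to the query's vector are the ones that get packaged into the prompt.

```python
import math
import re
import zlib

DIM = 256  # size of the toy embedding vectors (arbitrary choice)


def embed(text: str) -> list[float]:
    """Toy embedding: hash each word into one of DIM buckets, then L2-normalize.
    A real system would call a learned embedding model instead."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # crc32 is deterministic across runs, unlike Python's built-in hash().
        vec[zlib.crc32(word.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two already-normalized vectors is their dot product."""
    return sum(x * y for x, y in zip(a, b))


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: stores (vector, text) pairs
    and does a brute-force nearest-neighbor search over all of them."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]
```

Normalizing once, when a text is embedded, means each search only needs dot products. The later stages the paragraph describes (a chain of prompts answered by different systems, with the answers combined) are left out; they are where most of the real programming effort goes.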

What about the first, interactive definition? It’s worth noting that not all prompts are created equal. Prompts for ChatGPT are essentially free-form text. Free-form text sounds simple, and it is simple at first. However, more detailed prompts can look like essays, and when you take them apart, you realize that they are essentially computer programs. They tell the computer what to do, even though they aren’t written in a formal computer language. Prompts for an image generation AI like Midjourney can include sections that are written in an almost-formal metalanguage that specifies requirements like resolution, aspect ratio, styles, coordinates, and more. It’s not programming as such, but creating a prompt that produces professional-quality output is much more like programming than “a tarsier fighting with a python.”

So, the first thing anyone needs to learn about prompting is that writing really good prompts is more difficult than it seems. Your first experience with ChatGPT is likely to be “Wow, this is amazing,” but unless you get better at telling the AI precisely what you want, your 20th experience is more likely to be “Wow, this is dull.”

Second, I wouldn’t debate the claim that anyone can learn the basics of writing good prompts in a couple of hours. Chain of thought (in which the prompt includes some examples showing how to solve a problem) isn’t difficult to grasp. Neither is including evidence for the AI to use as part of the prompt. Neither are many of the other patterns that create effective prompts. There’s surprisingly little magic here. But it’s important to take a step back and think about what chain of thought requires: you need to tell the AI how to solve your problem, step by step, which means that you first need to know how to solve your problem. You need to have (or create) other examples that the AI can follow. And you need to decide whether the output the AI generates is correct. In short, you need to know a lot about the problem you’re asking the AI to solve.
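The few-shot form of chain of thought described above (worked examples that show their reasoning before the answer) is easy to assemble mechanically. In this minimal Python sketch, `WorkedExample`, `chain_of_thought_prompt`, and the sample problem are hypothetical, and the Q/Step/A layout is one arbitrary convention among many. What the code cannot supply is the content of `steps`: you have to know how to solve the problem to write them.

```python
from dataclasses import dataclass


@dataclass
class WorkedExample:
    """One solved problem to show the model (hypothetical structure)."""
    question: str
    steps: list[str]  # the reasoning you want the model to imitate
    answer: str


def chain_of_thought_prompt(examples: list[WorkedExample], question: str) -> str:
    """Few-shot chain-of-thought prompt: each example spells out its steps
    before its answer, then the new question is posed with an open first step."""
    parts = []
    for ex in examples:
        reasoning = "\n".join(
            f"Step {i}: {step}" for i, step in enumerate(ex.steps, start=1)
        )
        parts.append(f"Q: {ex.question}\n{reasoning}\nA: {ex.answer}")
    # Leave "Step 1:" open so the model continues in the same step-by-step format.
    parts.append(f"Q: {question}\nStep 1:")
    return "\n\n".join(parts)


# A made-up worked example; writing these steps requires knowing the solution.
EXAMPLE = WorkedExample(
    question="A book costs $12 and a pen costs $3. What do 2 books and 4 pens cost?",
    steps=[
        "2 books cost 2 * 12 = 24 dollars.",
        "4 pens cost 4 * 3 = 12 dollars.",
        "Together they cost 24 + 12 = 36 dollars.",
    ],
    answer="$36",
)

if __name__ == "__main__":
    print(chain_of_thought_prompt(
        [EXAMPLE],
        "A mug costs $8 and a spoon costs $2. What do 3 mugs and 5 spoons cost?",
    ))
```

The resulting prompt shows the worked example and then leaves an open `Step 1:` for the model to continue. Deciding whether the model's continuation is right still depends on your own grasp of the problem.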

That’s why many teachers, particularly in the humanities, are excited about generative AI. When used well, it’s engaging and it encourages students to learn more: learning the right questions to ask, doing the hard research to track down facts, thinking through the logic of the AI’s response carefully, deciding whether or not that response makes sense in its context. Students writing prompts for AI need to think carefully about the points they want to make, how they want to make them, and what supporting facts to use. I’ve made a similar argument about the use of AI in programming. AI tools won’t eliminate programming, but they’ll put more stress on higher-level activities: understanding user requirements, understanding software design, understanding the relationship between components of a much larger system, and strategizing about how to solve a problem. (To say nothing of debugging and testing.) If generative AI helps us put to rest the idea that programming is about antisocial people grinding out lines of code, and helps us to realize that it’s really about humans understanding problems and thinking about how to solve them, the programming profession will be in a better place.

I wouldn’t hesitate to advise anyone to spend two hours learning the basics of writing good prompts—or 4 or 8 hours, for that matter. But the real lesson here is that prompting isn’t the most important thing you can learn. To be really good at prompting, you need to develop expertise in what the prompt is about. You need to become more expert in what you’re already doing—whether that’s programming, art, or the humanities. You need to be engaged with the subject matter, not the AI. The AI is only a tool: a very good tool that does things that were unimaginable only a few years ago, but still a tool. If you give in to the seduction of thinking that AI is a repository of expertise and wisdom that a human couldn’t possibly obtain, you’ll never be able to use AI productively.

I wrote a PhD dissertation on late 18th and early 19th century English literature. I didn’t get that degree so that a computer could know everything about English Romanticism for me. I got it because I wanted to know. “Wanting to know” is exactly what it will take to write good prompts. In the long run, the will to learn something yourself will be much more important than a couple of hours of training in effective prompting patterns. Using AI as a shortcut so that you don’t have to learn is a big step on the road to irrelevance. The “will to learn” is what will keep you and your job relevant in an age of AI.