Automated Mentoring with ChatGPT

AI can help you learn—but it needs to do a better job

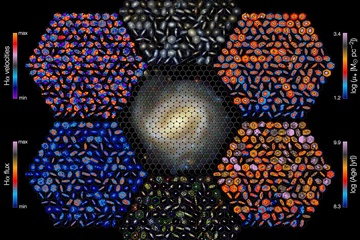

A composite of panels depicting maps of some of the properties of galaxies obtained from CALIFA data. (source: CALIFA collaboration on Wikimedia Commons)

Ethan and Lilach Mollick’s paper “Assigning AI: Seven Approaches for Students with Prompts” explores seven ways to use AI in teaching. (While this paper is eminently readable, there is a non-academic version in Ethan Mollick’s Substack.) The paper describes seven roles that an AI bot like ChatGPT might play in the education process: Mentor, Tutor, Coach, Teammate, Student, Simulator, and Tool. For each role, it includes a detailed example of a prompt that can be used to implement that role, along with an example of a ChatGPT session using the prompt, risks of using the prompt, guidelines for teachers, instructions for students, and instructions to help teachers build their own prompts.

The Mentor role is particularly important to the work we do at O’Reilly in training people in new technical skills. Programming (like any other skill) isn’t just about learning the syntax and semantics of a programming language; it’s about learning to solve problems effectively. That requires a mentor; Tim O’Reilly has always said that our books should be like “someone wise and experienced looking over your shoulder and making recommendations.” So I decided to give the Mentor prompt a try on some short programs I’ve written. Here’s what I learned–not particularly about programming, but about ChatGPT and automated mentoring. I won’t reproduce the session (it was quite long). And I’ll say this now, and again at the end: what ChatGPT can do right now has limitations, but it will certainly get better, and it will probably get better quickly.

First, Ruby and Prime Numbers

I first tried a Ruby program I wrote about 10 years ago: a simple prime number sieve. Perhaps I’m obsessed with primes, but I chose this program because it’s relatively short, and because I haven’t touched it for years, so I was somewhat unfamiliar with how it worked. I started by pasting in the complete prompt from the article (it is long), answering ChatGPT’s preliminary questions about what I wanted to accomplish and my background, and pasting in the Ruby script.

ChatGPT responded with some fairly basic advice about following common Ruby naming conventions and avoiding inline comments. (Rubyists used to think that code should be self-documenting. Unfortunately.) It also made a point about a puts() method call within the program’s main loop. That’s interesting–the puts() was there for debugging, and I evidently forgot to take it out. It also made a useful point about security: while a prime number sieve raises few security issues, reading command line arguments directly from ARGV rather than using a library for parsing options could leave the program open to attack.
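To make the advice about argument parsing concrete, here is a minimal sketch. The script discussed above was written in Ruby and is not reproduced here; this is a Python analogue using the standard argparse module, and the names parse_limit, sieve, and MAX_LIMIT (including its value) are hypothetical, chosen only for illustration.

```python
import argparse

MAX_LIMIT = 10_000_000  # hypothetical cap, chosen only for illustration


def parse_limit(argv):
    """Parse and validate the sieve's upper bound from a list of arguments."""
    parser = argparse.ArgumentParser(description="Print primes up to LIMIT.")
    # type=int rejects non-numeric input before the program ever uses it.
    parser.add_argument("limit", type=int, help="upper bound for the sieve")
    args = parser.parse_args(argv)
    if not 2 <= args.limit <= MAX_LIMIT:
        # parser.error prints a usage message and exits with a non-zero status.
        parser.error(f"limit must be between 2 and {MAX_LIMIT}")
    return args.limit


def sieve(limit):
    """Return all primes <= limit using the sieve of Eratosthenes."""
    is_prime = [True] * (limit + 1)
    is_prime[0:2] = [False, False]  # 0 and 1 are not prime
    for n in range(2, int(limit ** 0.5) + 1):
        if is_prime[n]:
            # Start at n * n: smaller multiples were already crossed out.
            for multiple in range(n * n, limit + 1, n):
                is_prime[multiple] = False
    return [n for n, flag in enumerate(is_prime) if flag]
```

A script would typically call `sieve(parse_limit(sys.argv[1:]))`. The point of the sketch is only that validation happens in one place, before the input reaches the rest of the program.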

It also gave me a new version of the program with these changes made. Rewriting the program wasn’t appropriate: a mentor should comment and provide advice, but shouldn’t rewrite your work. That should be up to the learner. However, it isn’t a serious problem. Preventing this rewrite is as simple as just adding “Do not rewrite the program” to the prompt.

Second Try: Python and Data in Spreadsheets

My next experiment was with a short Python program that used the Pandas library to analyze survey data stored in an Excel spreadsheet. This program had a few problems–as we’ll see.

ChatGPT’s Python mentoring didn’t differ much from its Ruby mentoring: it suggested some stylistic changes, such as using snake_case variable names, using f-strings (I don’t know why I didn’t; they’re one of my favorite features), encapsulating more of the program’s logic in functions, and adding some exception handling to catch possible errors in the Excel input file. It also objected to my use of “No Answer” to fill empty cells. (Pandas normally converts empty cells to NaN, “not a number,” and they’re frustratingly hard to deal with.) Useful feedback, though hardly earthshaking. It would be hard to argue against any of this advice, but at the same time, there’s nothing I would consider particularly insightful. If I were a student, I’d soon get frustrated after two or three programs yielded similar responses.

Of course, if my Python really was that good, maybe I only needed a few cursory comments about programming style–but my program wasn’t that good. So I decided to push ChatGPT a little harder. First, I told it that I suspected the program could be simplified by using the dataframe.groupby() function in the Pandas library. (I rarely use groupby(), for no good reason.) ChatGPT agreed–and while it’s nice to have a supercomputer agree with you, this is hardly a radical suggestion. It’s a suggestion I would have expected from a mentor who had used Python and Pandas to work with data. I had to make the suggestion myself.
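As an illustration of the kind of simplification groupby() offers, here is a small sketch. It is not the program described above: the DataFrame, its column names (role, uses_ai), and both function names are invented for the example.

```python
import pandas as pd

# Hypothetical survey data; the column names are invented for illustration.
survey = pd.DataFrame({
    "role": ["dev", "dev", "ops", "ops", "ops"],
    "uses_ai": ["yes", "no", "yes", "yes", "no"],
})


def counts_with_loop(df):
    """Count answers per role by filtering the frame once per role."""
    result = {}
    for role in df["role"].unique():
        subset = df[df["role"] == role]
        for answer in subset["uses_ai"].unique():
            result[(role, answer)] = int((subset["uses_ai"] == answer).sum())
    return result


def counts_with_groupby(df):
    """Produce the same counts with a single groupby() call."""
    grouped = df.groupby(["role", "uses_ai"]).size()
    # The result is a Series indexed by (role, answer) pairs.
    return {key: int(value) for key, value in grouped.items()}
```

Both functions return the same counts for this data; the groupby() version simply states the intent (count rows per role and answer) in one line instead of two nested loops.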

ChatGPT obligingly rewrote the code–again, I probably should have told it not to. The resulting code looked reasonable, though it made a not-so-subtle change in the program’s behavior: it filtered out the “No Answer” rows after computing percentages, rather than before. It’s important to watch out for minor changes like this when asking ChatGPT to help with programming. Such changes happen frequently; they look innocuous, but they can change the output. (A rigorous test suite would have helped.) This was an important lesson: you really can’t assume that anything ChatGPT does is correct. Even if it’s syntactically correct, even if it runs without error messages, ChatGPT can introduce changes that lead to errors. Testing has always been important (and under-utilized); with ChatGPT, it’s even more so.
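A small sketch shows why the order of filtering matters. The data and function names here are made up for illustration and are not taken from the program described above; the only assumption carried over is that unanswered cells were filled with the string "No Answer".

```python
import pandas as pd

# Hypothetical responses to one survey question.
answers = pd.Series(["Yes", "Yes", "No", "No Answer", "No Answer"])


def percentages_filter_first(s):
    """Drop 'No Answer' rows, then compute percentages of what remains."""
    kept = s[s != "No Answer"]
    return (kept.value_counts(normalize=True) * 100).round(1).to_dict()


def percentages_filter_last(s):
    """Compute percentages over all rows, then hide the 'No Answer' row."""
    pct = s.value_counts(normalize=True) * 100
    return pct.drop("No Answer", errors="ignore").round(1).to_dict()


print(percentages_filter_first(answers))
print(percentages_filter_last(answers))
```

With this data, filtering first reports "Yes" as roughly two thirds of the responses, while filtering last reports it as 40 percent, because the denominator still includes the unanswered rows. Both versions run without errors, which is exactly why a test that pins down the expected percentages is worth having.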

Now for the next test. I accidentally omitted the final lines of my program, which made a number of graphs using Python’s matplotlib library. While this omission didn’t affect the data analysis (it printed the results on the terminal), several lines of code arranged the data in a way that was convenient for the graphing functions. These lines of code were now a kind of “dead code”: code that is executed, but that has no effect on the result. Again, I would have expected a human mentor to be all over this. I would have expected them to say “Look at the data structure graph_data. Where is that data used? If it isn’t used, why is it there?” I didn’t get that kind of help. A mentor who doesn’t point out problems in the code isn’t much of a mentor.
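The question "where is that data used?" can even be asked mechanically. The following is a deliberately crude sketch using Python's standard ast module: it lists names that are assigned somewhere in a piece of source code but never read. The function name unused_assignments and the sample program are hypothetical; the check ignores scopes, attributes, and many other details that dedicated static analysis tools handle.

```python
import ast


def unused_assignments(source):
    """Return names that are assigned in `source` but never read.

    A crude check: it treats the whole file as one scope.
    """
    stored, loaded = set(), set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Store):
                stored.add(node.id)
            elif isinstance(node.ctx, ast.Load):
                loaded.add(node.id)
    return sorted(stored - loaded)


# Hypothetical stand-in for a script whose plotting code was cut off.
sample = '''
totals = {"Yes": 2, "No": 1}
graph_data = sorted(totals.items())
print(totals)
'''

print(unused_assignments(sample))
```

For the sample, the only name that is written but never read is graph_data, which is the question a mentor would be expected to raise.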

So my next prompt asked for suggestions about cleaning up the dead code. ChatGPT praised me for my insight and agreed that removing dead code was a good idea. But again, I don’t want a mentor to praise me for having good ideas; I want a mentor to notice what I should have noticed, but didn’t. I want a mentor to teach me to watch out for common programming errors, and that source code inevitably degrades over time if you’re not careful–even as it’s improved and restructured.

ChatGPT also rewrote my program yet again. This final rewrite was incorrect–this version didn’t work. (It might have done better if I had been using Code Interpreter, though Code Interpreter is no guarantee of correctness.) That both is, and is not, an issue. It’s yet another reminder that, if correctness is a criterion, you have to check and test everything ChatGPT generates carefully. But–in the context of mentoring–I should have written a prompt that suppressed code generation; rewriting your program isn’t the mentor’s job. Furthermore, I don’t think it’s a terrible problem if a mentor occasionally gives you poor advice. We’re all human (at least, most of us). That’s part of the learning experience. And it’s important for us to find applications for AI where errors are tolerable.

So, what’s the score?

- ChatGPT is good at giving basic advice. But anyone who’s serious about learning will soon want advice that goes beyond the basics.

- ChatGPT can recognize when the user makes good suggestions that go beyond simple generalities, but is unable to make those suggestions itself. This happened twice: when I had to ask it about groupby(), and when I asked it about cleaning up the dead code.

- Ideally, a mentor shouldn’t generate code. That can be fixed easily. However, if you want ChatGPT to generate code implementing its suggestions, you have to check carefully for errors, some of which may be subtle changes in the program’s behavior.

Not There Yet

Mentoring is an important application for language models, not least because it finesses one of their biggest problems: their tendency to make mistakes. A mentor that occasionally makes a bad suggestion isn’t really a problem; following the suggestion and discovering that it’s a dead end is an important learning experience in itself. You shouldn’t believe everything you hear, even if it comes from a reliable source. And a mentor really has no business generating code, incorrect or otherwise.

I’m more concerned about ChatGPT’s difficulty in providing advice that’s truly insightful, the kind of advice that you really want from a mentor. It is able to provide advice when you ask it about specific problems–but that’s not enough. A mentor needs to help a student explore problems; a student who is already aware of the problem is well on their way towards solving it, and may not need the mentor at all.

ChatGPT and other language models will inevitably improve, and their ability to act as a mentor will be important to people who are building new kinds of learning experiences. But they haven’t arrived yet. For the time being, if you want a mentor, you’re on your own.