The ChatGPT Surge

Why did searches for ChatGPT decline sharply in June and July?

Network (source: Pixabay)

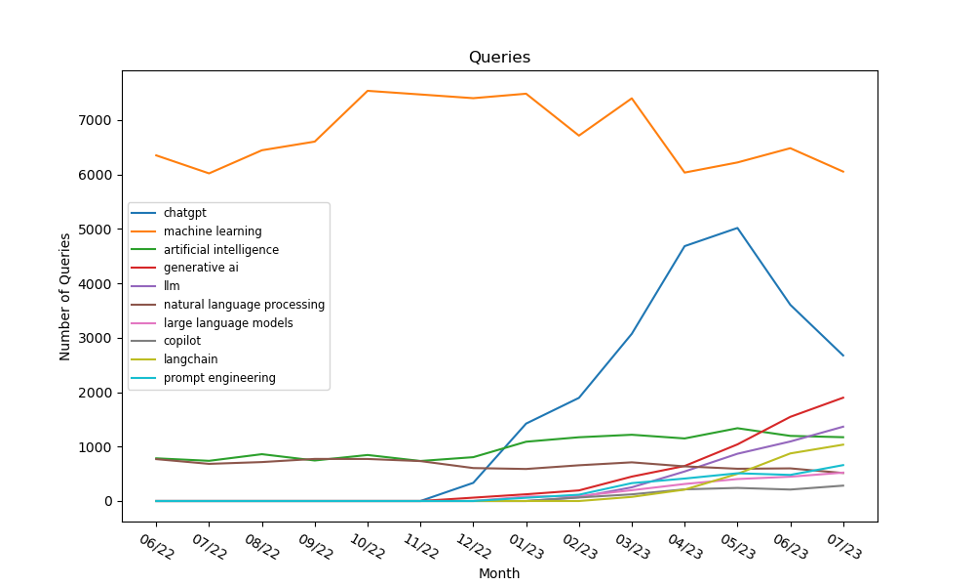

I’m sure that nobody will be surprised that the number of searches for ChatGPT on the O’Reilly learning platform skyrocketed after its release in November 2022. It might be a surprise how quickly it got to the top of our charts: it peaked in May as the 6th most common search query. Then it fell almost as quickly: back to #8 in June, and further to #19 in July. At its peak, ChatGPT was in very exclusive company: it’s not quite on the level of Python, Kubernetes, and Java, but it’s in the mix with AWS and React, and significantly ahead of Docker.

A look at the number of searches for terms commonly associated with AI shows how dramatic this rise was:

ChatGPT came from nowhere to top all the AI search terms except for Machine Learning itself, which is consistently our #3 search term. Despite ChatGPT’s dramatic decline in June and July, it’s still ahead of all other search terms relevant to AI. The number of searches for Machine Learning itself held steady, though it arguably declined slightly when ChatGPT appeared. What’s more interesting, though, is that the search term “Generative AI” suddenly emerged from the pack as the third most popular AI-related search term. If current trends continue, in August we might see more searches for Generative AI than for ChatGPT.

What can we make of this? Everyone knows that ChatGPT had one of the most successful launches of any software project, passing a million users in its first five days. (Since then, it’s been beaten by Facebook’s Threads, though that’s not really a fair comparison.) There are plenty of reasons for this surge. Talking computers have been a science fiction dream since well before Star Trek—by itself, that’s a good reason for the public’s fascination. ChatGPT might simplify common tasks, from doing research to writing essays to basic programming, so many people want to use it to save labor—though getting it to do quality work is more difficult than it seems at first glance. (We’ll leave the issue of whether this is “cheating” to the users, their teachers, and their employers.) And, while I’ve written frequently about how ChatGPT will change programming, it will undoubtedly have an even greater effect on non-programmers. It will give them the chance to tell computers what to do without programming; it’s the ultimate “low code” experience.

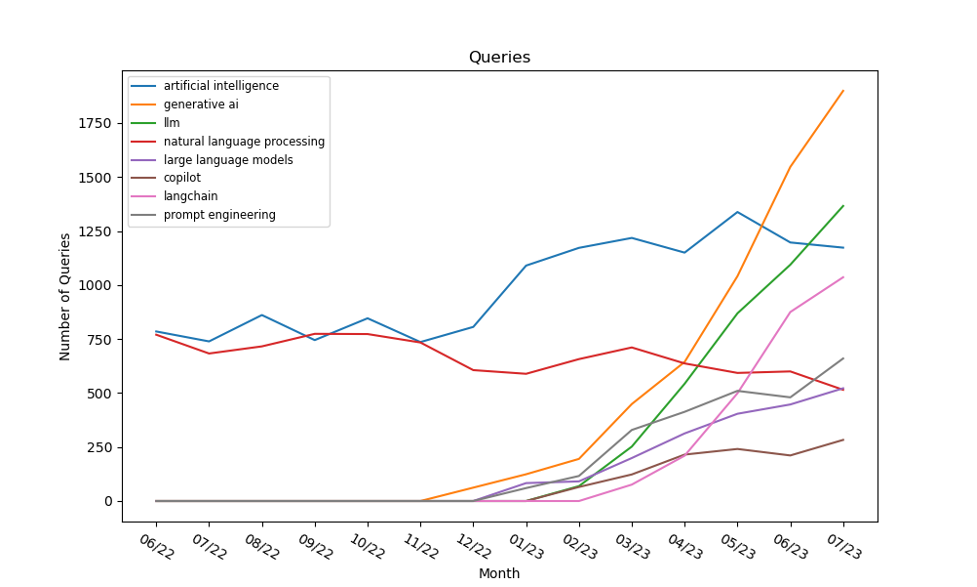

So there are plenty of reasons for ChatGPT to surge. What about other search terms? It’s easy to dismiss these search queries as also-rans, but they were all in the top 300 for May, 2023—and we typically have a few million unique search terms per month. Removing ChatGPT and Machine Learning from the previous graph makes it easier to see trends in the other popular search terms:

It’s mostly “up and to the right.” Three search terms stand out. Generative AI, LLM, and Langchain all follow similar curves: they start off with relatively moderate growth that suddenly becomes much steeper in February 2023. We’ve already noted that the number of searches for Generative AI has increased sharply since the release of ChatGPT, and it hasn’t declined in the past two months. Our users evidently prefer LLM to spelling out “Large Language Models,” but if you add these two search terms together, the total number of searches for July is within 1% of Generative AI. This surge didn’t really start until last November, when it was spurred by the appearance of ChatGPT, even though search terms like LLM were already in circulation because of GPT-3, DALL-E, Stable Diffusion, Midjourney, and other language-based generative AI tools.

Unlike LLMs, Langchain didn’t exist prior to ChatGPT, but once it appeared, the number of searches took off rapidly, and didn’t decline in June and July. That makes sense; although it’s still early, Langchain looks like it will be the cornerstone of LLM-based software development. It’s a widely used platform for building applications that generate queries programmatically and that connect LLMs with each other, with databases, and with other software. Langchain is frequently used to look up relevant articles that weren’t in ChatGPT’s training data and package them as part of a lengthy prompt.
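
To make that last pattern concrete, here is a minimal sketch in plain Python of looking up relevant articles and packaging them into a prompt. It does not use Langchain’s actual API; the function names (`tokenize`, `retrieve`, `build_prompt`), the sample `ARTICLES`, and the word-overlap scoring are all assumptions made for illustration. A real application would more likely rank documents with embeddings and a vector store, and would send the resulting prompt to a model.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, articles: list[str], k: int = 2) -> list[str]:
    """Return the k articles that share the most words with the question.

    Word overlap is a stand-in (an assumption) for a real similarity search.
    """
    q = tokenize(question)
    ranked = sorted(articles, key=lambda a: len(q & tokenize(a)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, articles: list[str], k: int = 2) -> str:
    """Package the retrieved articles and the question into a single prompt."""
    context = "\n\n".join(
        f"[{i + 1}] {a}" for i, a in enumerate(retrieve(question, articles, k))
    )
    return (
        "Answer the question using only the articles below.\n\n"
        f"{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Hypothetical documents that were not part of the model's training data.
ARTICLES = [
    "Langchain connects language models with databases and other software.",
    "Kubernetes schedules containers across a cluster of machines.",
    "Searches for Generative AI rose sharply after the release of ChatGPT.",
]

if __name__ == "__main__":
    question = "What does Langchain connect language models with?"
    print(build_prompt(question, ARTICLES, k=1))
```

The point of the sketch is the shape of the workflow: the application, not the user, decides which documents to include, and the prompt that reaches the model can be much longer than the question that started it.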

In this group, the only search term that seems to be in a decline is Natural Language Processing. Although large language models clearly fall into the category of NLP, we suspect that most users associate NLP with older approaches to building chatbots. Searches for Artificial Intelligence appear to be holding their own, though it’s surprising that there are so few searches for AI compared to Machine Learning. The difference stems from O’Reilly’s audience, which is relatively technical and prefers the more precise term Machine Learning. Nevertheless, the number of searches for AI rose with the release of ChatGPT, possibly because ChatGPT’s appeal wasn’t limited to the technical community.

Now that we’ve run through the data, we’re left with the big question: What happened to ChatGPT? Why did it decline from roughly 5,000 searches to slightly over 2,500, a drop of nearly half, in a period of two months? There are many possible reasons. Perhaps students stopped using ChatGPT for homework assignments as graduation and summer vacation approached. Perhaps ChatGPT has saturated the world; people know what they need to know, and are waiting for the next blockbuster. An article in Ars Technica notes that ChatGPT usage declined from May to June, and suggests many possible causes, including attention to the Twitter/Threads drama and frustration because OpenAI implemented stricter guardrails to prevent abuse. It would be unfortunate if ChatGPT usage were declining because people can’t use it to generate abusive content, but that’s a different article…

A more important reason for this decline might be that ChatGPT is no longer the only game in town. There are now many alternative language models. Most of these alternatives descend from Meta’s LLaMA and Georgi Gerganov’s llama.cpp (which can run on laptops, cell phones, and even Raspberry Pi). Users can train these models to do whatever they want. Some of these models already have chat interfaces, and all of them could support chat interfaces with some fairly simple programming. None of these alternatives generate significant search traffic at O’Reilly, but that doesn’t mean that they won’t in the future, or that they aren’t an important part of the ecosystem. Their proliferation is an important piece of evidence about what’s happening among O’Reilly’s users. AI developers now need to ask a question that didn’t even exist last November: should they build on large foundation models like ChatGPT or Google’s Bard, using public APIs and paying by the token? Or should they start with an open source model that can run locally and be trained for their specific application?

This last explanation makes a lot of sense in context. We’ve moved beyond the initial phase, when ChatGPT was a fascinating toy. We’re now building applications and incorporating language models into products, so trends in search terms have shifted accordingly. A developer interested in building with large language models needs more context; learning about ChatGPT by itself isn’t enough. Developers who want to learn about language models need different kinds of information, information that’s both deeper and broader. They need to learn about how generative AI works, about new LLMs, about programming with Langchain and other platforms. All of these search terms increased while ChatGPT declined. Now that there are options, and now that everyone has had a chance to try out ChatGPT, the first step in an AI project isn’t to search for ChatGPT. It’s to get a sense of the landscape, to discover the possibilities.

Searches for ChatGPT peaked quickly, and are now declining rapidly—and who knows what August and September will bring? (We wouldn’t be surprised to see ChatGPT bounce back as students return to school and homework assignments.) The real news is that ChatGPT is no longer the whole story: you can’t look at the decline in ChatGPT without also considering what else our users are searching for as they start building AI into other projects. Large language models are very clearly part of the future. They will change the way we work and live, and we’re just at the start of the revolution.